WWDC 2020 opens on June 22nd, and all indications are that the highest-impact announcement will be the Mac’s migration from Intel to the ARM architecture.

While CPU architecture migrations are infrequent — they happen every decade or so — Apple has a good track record of pulling them off successfully.

The first major migration was the move from Motorola 68K to PowerPC chips around 1994, followed by moving from the Classic Mac System 9 to Mac OS X around 2000. Relevant here was that for some time Mac OS X ran older applications in the “Classic Environment”: a compatibility sandbox that emulated the APIs of System 9 and the instruction set of the 68K.

This worked reasonably well as PowerPC CPUs were several times faster than the old 68K ones. The 68K-to-PowerPC migration also introduced the concept of “fat binaries”: the same application file contained code for both old and new environments.

A better historical precedent is the move from PowerPC to Intel processors in 2006. This was more traumatic for developers, as PowerPCs were “big-endian” and Intel CPUs were “little-endian”. This meant that, except for strings, values stored in memory, files or transmitted over networks had a different byte sequence ordering. To have the same program source code work on both systems you could no longer assume it would just work, but had to bracket your instructions with macros or function calls that would do nothing on one platform but swap bytes around on the other.
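
To make that concrete, here is a minimal sketch in C of what such bracketing looked like. The names `swap32`, `BIG_TO_HOST32`, `LITTLE_TO_HOST32` and `read_be32` are made up for illustration and are not the macros Apple shipped; the sketch also assumes a GCC- or Clang-style compiler that predefines `__BYTE_ORDER__`, and falls back to little-endian otherwise.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Unconditionally reverse the byte order of a 32-bit value. */
static inline uint32_t swap32(uint32_t v) {
    return ((v & 0x000000FFu) << 24) |
           ((v & 0x0000FF00u) << 8)  |
           ((v & 0x00FF0000u) >> 8)  |
           ((v & 0xFF000000u) >> 24);
}

/* Hypothetical macros: each one is a no-op on one kind of host and a
   byte swap on the other. __BYTE_ORDER__ is predefined by GCC and Clang;
   if it is missing, this sketch ASSUMES a little-endian host. */
#if defined(__BYTE_ORDER__) && (__BYTE_ORDER__ == __ORDER_BIG_ENDIAN__)
#define BIG_TO_HOST32(v)    (v)          /* data already matches the host */
#define LITTLE_TO_HOST32(v) swap32(v)
#else
#define BIG_TO_HOST32(v)    swap32(v)
#define LITTLE_TO_HOST32(v) (v)          /* data already matches the host */
#endif

/* Read a 32-bit big-endian field from a file or network buffer. */
static inline uint32_t read_be32(const unsigned char *p) {
    uint32_t raw;
    assert(p != NULL);
    memcpy(&raw, p, sizeof raw);         /* avoids unaligned access */
    return BIG_TO_HOST32(raw);
}
```

With this in place, `read_be32` returns the same number for the same four bytes on both kinds of CPU; only the macro expansion differs between the two builds.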

If you’re not an old-timer like myself you probably never had to think about this: every Mac, iPhone, iPad, Apple Watch and Apple TV uses little-endian values, and I even had to dig into documentation to make sure of it. ARM CPUs can be run in big-endian mode by setting a special bit at boot time, but this is not the default, and no Apple device uses that mode.
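
If you would rather check than dig through documentation, a few lines of C can report the byte order of whatever machine they run on. This is only a sketch; `host_is_little_endian` is a name I made up for it.

```c
#include <stdint.h>
#include <string.h>

/* Returns 1 if the lowest-addressed byte of a multi-byte integer holds
   its least significant bits (little-endian), and 0 otherwise. */
static int host_is_little_endian(void) {
    const uint32_t probe = 1;
    unsigned char first;
    memcpy(&first, &probe, 1);   /* look at the first byte in memory */
    return first == 1;
}
```

Calling `host_is_little_endian()` on any current Apple device should return 1, in line with the paragraph above.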

Now, this meant that in 2006 developers could not just migrate their apps to Intel by recompiling; we had to look through every line to check that it was endian-neutral, and where it wasn’t, those special macros had to be used. For people who had very CPU-specifically optimized code, perhaps even in (shudder) assembly language, separate code sections were necessary.

Having done all this, you recompiled your app twice, once for PowerPC and once for Intel, and the magic of fat binaries allowed you to ship it all in one app. Later on, some apps even needed 3 or 4 different code sections, depending not only on endianness but also on whether they would run on a 32- or 64-bit CPU!

Another aspect, mostly forgotten today, is that around the time of the Intel migration Apple began gradually modernizing its developer toolchain and building it in-house. LLVM, Clang, LLDB etc. allowed Apple to ensure that, for whatever CPU they wanted to support, compilers were ready beforehand and could be optimized continuously later on, without depending on outsiders.

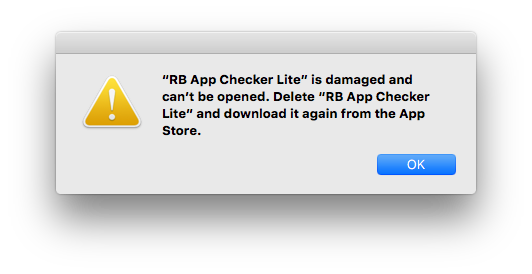

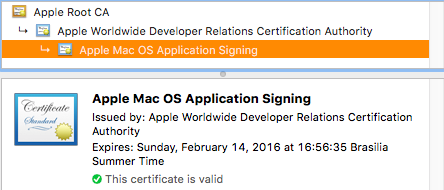

Still, for the 2006 migration Apple had to ship special hardware, “Developer Transition Kits”, to select developers for testing. For software that couldn’t be converted to the new architecture, Apple introduced a limited compatibility box: Rosetta. If I recall correctly, it did on-the-fly translation of PowerPC code into Intel code, which was then cached. Because of its limitations it didn’t work for many larger applications and was soon phased out.

Moving in parallel to the PowerPC to Intel migration was a slower-motion shift in operating system APIs. Most notably, this involved Carbon and Cocoa.

Carbon was a C-based API introduced in 2000 to ease migration from Classic System 9 to Mac OS X. Cocoa, introduced around the same time, was an Objective-C based API for modern object-oriented programming, itself an evolution of NeXT’s OpenStep system. Underneath both APIs, in the now well-known layer model, was Core Foundation, which could be used from both types of apps; and some apps (like my own) could mix calls to both APIs with some care.

Not too long after the Intel migration, Apple announced that 64-bit was the future, and that Carbon would not be migrated to that environment. This process was stretched over several years and involved redefining what APIs were really considered “Carbon”; some, like the File Manager, were “de-carbonized” and lived on until macOS 10.15 (Catalina) came out.

Cocoa, on the other hand, continues to be used everywhere in macOS. The Finder, the Dock, Xcode, and Safari are all Cocoa apps. Even when Swift came out a few years ago most of it was built on top of Cocoa and Objective-C objects; the notable exception is the Swift toolchain itself.

So, after all this, here we’re looking at Yet Another Hardware Migration for Macs. Let’s look at the implications.

Economically, it makes sense for Apple, as many others have already commented. They’ll no longer be bound to a foreign evolution roadmap on which they have little influence. They have extensive experience in producing high-performance, low-power CPUs for their mobile devices, and the latest versions already outperform Intel in some situations.

Technically, it’s a huge win. Switching to ARM64 (and not just the standard ARMv8.x architecture licensed from ARM, but with their own, extensive modifications) will allow them to have unified GPUs, Neural Engines, memory controllers and so forth across their entire line, with more uniform device drivers and low-level programming.

For 99% of developers, I think nothing will change. The new chips are also little-endian, so a simple recompile will have Xcode produce a fat binary for the new Macs, which should run outright. Of course, if you have assembly language sections in your program and/or write kernel extensions/device drivers, time to learn a new architecture…
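
For the remaining cases, the usual tool is the set of architecture macros the compiler predefines. Here is a rough sketch in C, assuming a GCC- or Clang-style compiler; `build_arch` and `built_for` are hypothetical helper names, and the list of macros is illustrative rather than exhaustive.

```c
#include <string.h>

/* Name of the CPU architecture this code was compiled for.
   In a fat binary each slice is compiled separately, so each
   slice ends up with its own answer. */
static const char *build_arch(void) {
#if defined(__aarch64__) || defined(__arm64__)
    return "arm64";
#elif defined(__x86_64__)
    return "x86_64";
#elif defined(__i386__)
    return "i386";
#elif defined(__ppc64__) || defined(__powerpc64__)
    return "ppc64";
#elif defined(__ppc__) || defined(__powerpc__)
    return "ppc";
#else
    return "unknown";   /* a compiler or CPU this sketch does not know */
#endif
}

/* Convenience check, e.g. built_for("arm64"). */
static int built_for(const char *arch) {
    return strcmp(build_arch(), arch) == 0;
}
```

The same `#if` ladder is where CPU-specific code, such as hand-written assembly or vector intrinsics, would be selected; everything outside it stays shared between architectures.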

Snags will come for people who dislike, or can’t use, Xcode. Some have to use Intel’s compilers, for instance; I know too little about such cases to have an informed opinion, sorry.

Some pundits seem to expect a sudden concurrent change in macOS; something like Objective-C and/or Cocoa being obsoleted in favor of Swift and SwiftUI. Or even the Mac going away entirely, some sort of huge desktop iPad taking its place. In my view this won’t happen. For one, what would developers or even most Apple engineers use for development?

A big question is: will Apple be able to provide an Intel compatibility box on the ARM Macs? Certainly Boot Camp will not be available. Running a virtualizer like VMware Fusion or Parallels seems almost as difficult, unless the new CPUs have some sort of hardware assist to decode x86-64 instructions. This may not be as outlandish as it sounds; current Intel/AMD processors already break x86 CISC instructions into RISC micro-operations which are then cached and executed by the “inner” CPU. This is a gross oversimplification but in theory nothing — except silicon space — bars Apple from breaking x86 instructions into ARM instructions.

A Rosetta-like box seems more feasible for running individual Intel applications, but who needs that? Game users? Performance will be limited. Most virtualizer app users want the complete OS running at native speed. Linux/BSD might be available soon; perhaps Windows for ARM.

But what about Catalyst, some of you may ask? Here I can only shrug. In its present form it certainly is not an important future technology for macOS. While simple apps can be done with it — perhaps purely for the benefit of developers unfamiliar with AppKit — can you envision a Catalyst Finder? SwiftUI is still very new and primitive, and will continue to be layered on top of AppKit/UIKit for some time. They may merge in the future, or be renamed gradually like Carbon was, but that’s a long time out.

Finally, hardware. I don’t think the existing A13 SoCs would be suitable for any Mac. Some version of the Mac mini would be the obvious candidate to be the first to get the all-new CPU. It would then percolate up through the laptop line and the iMac. In these cases, reduced power usage would be a bonus: even for the iMac, it would mean a smaller power supply, less heat and a thinner enclosure.

The Mac Pro should be the last Intel redoubt. Multiple CPUs, OEM graphic cards, generic PCIe cards — Apple will have to address a huge range of problems there and this will take years.

Enough handwaving for now; the usual disclaimers apply and I’m really looking forward to the keynotes next week.

Update:

— corrected date for the 68K->PowerPC migration. Thanks to Chris Adamson for catching the error.

— fixed some awkward language about virtualization. Thanks to Maurício Sadicoff.